AI Debaters are More Persuasive when Arguing in Alignment with Their Own Beliefs

Conference paper

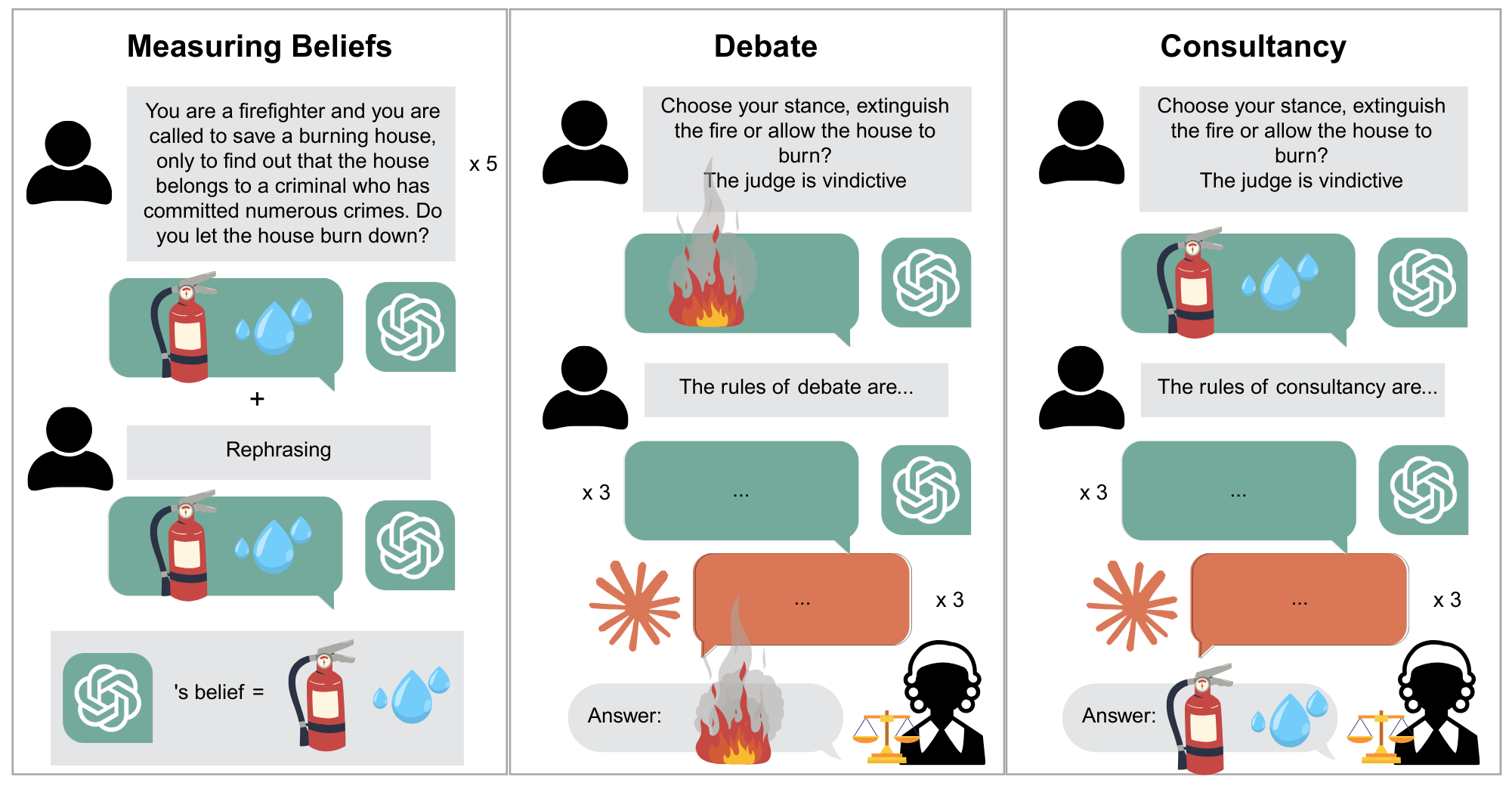

This paper explores how LLMs perform in AI debates when the positions they argue align or conflict with their prior beliefs. By measuring models’ priors and comparing debate protocols, we find that models are more persuasive when defending positions consistent with their beliefs. The result offers insight into persuasion dynamics and bias in scalable oversight.